As might be obvious these days, publishing a study on wearables is the fashionable thing to do. There seems to be a new major (or at least mainstream-media-noticed) wearable study published every month, sometimes more often. And that ignores the likely hundreds of smaller wearable studies, never picked up by anyone, that probably occur each year in all manner of settings.

And in general – these studies are doing good work. They’re aiming to validate and hold accountable manufacturer claims. That’s always a good thing – and something I aim to do here as well. Many times these studies will focus on a specific claim, such as heart rate accuracy or step accuracy, as those are generally somewhat easy to validate externally through a variety of means. In the case of steps it can be as simple as manually counting the steps taken, and in the case of heart rate it may mean cross-referencing against medical grade systems.

All of which are 100% valid ways to corroborate data from wearables.

Except there’s one itty bitty problem I’m seeing more and more often: They’re often doing it wrong.

(Note: This isn’t the first time I’ve taken a supposedly scientific study to task; you’ll see my previous take here. Ironically, both entities screwed up in the same way.)

Why reading the manual matters

Most studies I’ve seen test 5-7 different devices. Almost always one of these devices is an Apple Watch, because that has mainstream media appeal. Additionally, you’ll usually find a Fitbit in there too, because of both mainstream media interest and its status as the most popular activity tracker ever. After that you’ll find a smattering of random devices, usually a Mio, sometimes a Garmin, sometimes a Polar, and then sometimes totally random things like an older Jawbone or others.

From there, devices are tested against either medical grade systems or consumer grade systems. The Polar H7 strap is often used, which, while not quite as ideal as a medical grade system, is generally a good option, assuming you know the obvious signs of a strap having issues (remember – that’s still a very common thing).

But that’s not what’s concerning me lately.

(Actually, before we go forward – a brief aside: First, my goal isn’t to pick on this Stanford study per se, but that’s probably what’s going to happen. I actually *agree* with what they’re attempting to say in the end. But I don’t agree with how they got there. And as you’ll see, that’s really important because it significantly alters the results. Second, they’ve done a superb job of publishing their exact protocol and much of the data from it, something the vast majority of studies don’t do. So at least they’re thorough in that regard. Also, I like their step procedure for testing at different intensities. It’s one of the better designed tests I’ve seen.

Next, do NOT mistake what I’m about to dive into as me saying all optical HR sensors are correct. In fact, far from it. The vast majority are complete and utter junk for workouts. But again, that’s not what we’re talking about here. This is far more basic. Ok, my aside is now complete.)

Instead, what’s concerning me lately is best seen in this photo from the Stanford study published last week (full text available here):

(Photo Credit: Paul Sakuma/Stanford)

As you can see, the participant is wearing four devices concurrently. This is further confirmed within their protocol documents:

“Participants were wearing up to four devices simultaneously and underwent continuous 12-lead electrocardiographic (ECG) monitoring and continuous clinical grade indirect calorimetry (expired gas analysis) using FDA approved equipment (Quark CPET, COSMED, Rome, Italy).”

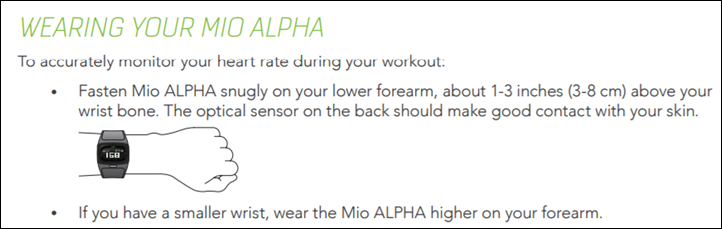

First and foremost – the Mio Alpha 2 on the left wrist (lower one) is very clearly atop the wrist bone. Which is quite frankly the single biggest error you can make in wearing an optical HR sensor. It’s the worst spot to wear it. Don’t worry though, this is covered within the Mio Alpha 2 manual (page 6):

But let’s pretend that’s just a one-off out of the 60 participants when the camera came by. It happens.

The bigger issue here is them wearing two optical HR sensor devices per wrist (which they did on all participants). Doing so affects other optical HR sensors on that wrist. This is very well known and easily demonstrated, especially if one of the watches/bands is worn tightly. In fact, every single optical HR sensor company out there knows this, and is a key reason why none of them do dual-wrist testing anymore. It’s also why I stopped doing any dual wrist testing about 3 years ago for watches. One watch, one wrist. Period.

If you want a fun ‘try at home’ experiment, go ahead and put on one watch with an optical HR sensor. Now pick a nice steady-state activity (ideally a treadmill, perhaps a stationary bike), and then put another watch on that same wrist and place it nice and snug (as you would with an optical HR sensor). You’ll likely start to see fluctuations in accuracy. And across a sample of 60 people (or 120 wrists), some of those fluctuations are all but guaranteed to show up.

I know it makes for a fun picture that the media will eat up – but seriously – it really does impact things. Similarly, see in the above picture how the Apple Watch is touching the Fitbit Blaze? That’s also likely impacting steps.

Another fun at-home test you can do is wear two watches side by side touching, just enough so while running on a treadmill they tap together. This can increase step counts as a false-positive.
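To illustrate why a few taps matter, here is a toy threshold-crossing step counter run on made-up accelerometer magnitudes. It is not any vendor’s algorithm (those are proprietary and far more elaborate); the threshold and both signals are hypothetical, chosen only to show how extra impulses from two watches knocking together get counted as steps.

```python
def count_steps(samples, threshold=1.5):
    """Toy step counter: count upward crossings of a fixed threshold.

    `samples` are hypothetical accelerometer magnitudes in g; the
    threshold is an arbitrary illustrative value, not a vendor setting.
    """
    steps = 0
    above = False
    for value in samples:
        if value >= threshold and not above:
            steps += 1      # rising edge: treat as one step
            above = True
        elif value < threshold:
            above = False   # re-arm once the signal drops back down
    return steps


# Clean arm swing: one peak per step, 10 steps total (invented numbers).
clean = [1.0, 1.8, 1.0] * 10

# Same swing, but on every other step the two watches tap together,
# adding a short spike between peaks (again, invented numbers).
tapped = []
for i in range(10):
    tapped += [1.0, 1.8, 1.0]
    if i % 2 == 0:
        tapped += [1.7, 1.0]  # impact spike from the neighboring watch

print(count_steps(clean))   # 10
print(count_steps(tapped))  # 15
```

Five taps become five extra “steps”, a 50% overcount from contact alone in this contrived case.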

None of this is making excuses for these watches. It’s simply the reality that users don’t wear two optical HR sensor watches in the real world. But honestly, that’s probably the least of the issues with this study (which is saying a lot, because at this point alone I’d have thrown out the data).

In case you’re wondering why they did this – here’s what they said:

“1) We wanted to check for any positional effects on the watch performance –i.e. does right vs left wrist matter? Does higher or lower on the wrist matter? So watch arm & position was randomized (see supplementary tables in manuscript).

2) We are more confident in results obtained from same workout rather than separate workouts for each device.

3) Purely practical — having the same subject perform the protocol 4 – 7 times is infeasible. It would have been challenging to get compliance in a sufficient number of subjects.”

I get that in order to reduce time invested, you want to take multiple samples at the same time. In fact, I do it on almost all my workouts. Except, I don’t put two optical HR watches per wrist. It simply invalidates the data. No amount of randomizing bad data makes it better. It’s still bad data.

And when we’re talking about a few percent mattering, even if 1 out of 5 people has issues, that’s a 20% invalid data rate – a massive difference. There’s no two ways about it.
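A quick worked example shows why that matters. The error figures below are invented for illustration and are not the study’s numbers; the point is only that a minority of corrupted wrists can more than double a pooled error figure.

```python
# Hypothetical per-wrist mean absolute HR errors, in percent.
# Illustrative values only; not taken from the study.
clean_error_pct = 2.0      # wrists with one watch worn correctly
corrupt_error_pct = 15.0   # wrists disturbed by a second snug watch
corrupt_share = 1 / 5      # "1 out of 5 people has issues"

# Pooled error is the share-weighted average of the two groups.
pooled = (1 - corrupt_share) * clean_error_pct + corrupt_share * corrupt_error_pct
print(f"pooled error: {pooled:.1f}%")  # pooled error: 4.6%
```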

Let’s Talk Fake Data

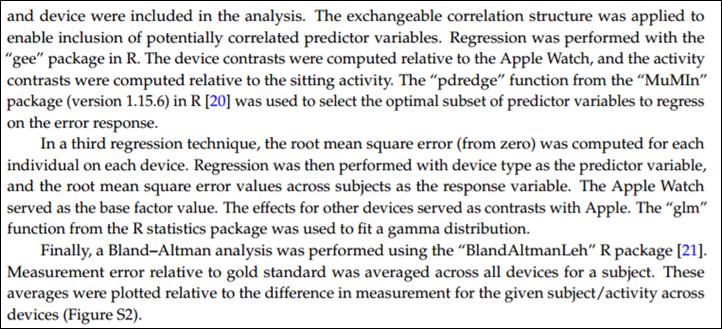

Another trend I see over and over again is using one-minute averages in studies. I don’t know where along the way someone told academia that one-minute sport averages are acceptable – but it’s become all the rage these days. These studies go to extreme lengths running double and triple regressions on these data points, yet lack accurate data to run those regressions on.

Just check out the last half of how this data was processed:

Except one itty-bitty problem: They didn’t use the data from the device and app.

Instead, they used the one-minute averages as reported by various methods (most of which aren’t actually the official methods). For example, here’s how they accessed the Mio Alpha 2:

“The raw data from the Mio device is not accessible. However, static images of the heart rate over the duration of the activity are stored in the Mio phone app. The WebPlotDigitizer tool was utilized to trace over the heart rate images and to discretize the data to the minute level.”

Translation: They took a JPG image screenshot and tried to trace the image to determine the data points.
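Tracing a chart means mapping pixel positions back to values through a linear axis calibration (two known reference points per axis, the usual approach for chart digitizers). The sketch below assumes a hypothetical phone-app chart whose y-axis squeezes 40-200 bpm into 80 pixels; at that scale every pixel of tracing error is 2 bpm, before any JPG blur or line thickness is even considered.

```python
def pixel_to_value(pixel, pixel_a, value_a, pixel_b, value_b):
    """Linear calibration: map a pixel coordinate to a data value using two
    reference points on the axis (pixel_a -> value_a, pixel_b -> value_b)."""
    scale = (value_b - value_a) / (pixel_b - pixel_a)
    return value_a + (pixel - pixel_a) * scale


# Hypothetical chart: 40 bpm sits at pixel row 300 and 200 bpm at row 220
# (image rows grow downward), so 160 bpm spans only 80 pixels.
Y_A, BPM_A = 300, 40
Y_B, BPM_B = 220, 200

true_bpm = pixel_to_value(250, Y_A, BPM_A, Y_B, BPM_B)  # line really at row 250
for traced_row in (249, 251):  # tracing off by one pixel either way
    error = pixel_to_value(traced_row, Y_A, BPM_A, Y_B, BPM_B) - true_bpm
    print(f"row {traced_row}: {error:+.1f} bpm")
# row 249: +2.0 bpm
# row 251: -2.0 bpm
```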

Pro Tip: They could have simply connected the Mio Alpha 2 to any phone app or any other watch device to gather second-by-second HR data via the rebroadcasting feature. After all, that’s kinda the main selling point of the Mio Alpha 2. Actually, it’s almost the only selling point these days.
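For reference, HR rebroadcast over Bluetooth Smart normally uses the standard Heart Rate Service, where each notification of the Heart Rate Measurement characteristic (0x2A37) carries a flags byte followed by the heart rate. The sketch below only decodes such a payload. It assumes the Mio rebroadcasts through that standard service, leaves the actual BLE connection (which depends on your platform and library) out entirely, and uses made-up payloads.

```python
import struct


def parse_hr_measurement(payload: bytes) -> dict:
    """Decode a Bluetooth Heart Rate Measurement (0x2A37) notification.

    Layout: flags byte, HR value (uint8, or little-endian uint16 if flag
    bit 0 is set), optional energy expended in kJ (uint16, flag bit 3),
    optional RR intervals (uint16 each, units of 1/1024 s, flag bit 4).
    """
    flags = payload[0]
    offset = 1
    if flags & 0x01:  # bit 0: HR value is uint16
        (bpm,) = struct.unpack_from("<H", payload, offset)
        offset += 2
    else:             # otherwise HR value is uint8
        bpm = payload[offset]
        offset += 1

    energy_kj = None
    if flags & 0x08:  # bit 3: energy expended field present
        (energy_kj,) = struct.unpack_from("<H", payload, offset)
        offset += 2

    rr_seconds = []
    if flags & 0x10:  # bit 4: one or more RR intervals present
        while offset + 1 < len(payload):
            (rr,) = struct.unpack_from("<H", payload, offset)
            rr_seconds.append(rr / 1024)
            offset += 2

    return {"bpm": bpm, "energy_kj": energy_kj, "rr_seconds": rr_seconds}


# Made-up notifications; a strap or band typically sends about one per second.
print(parse_hr_measurement(bytes([0x00, 142])))
# {'bpm': 142, 'energy_kj': None, 'rr_seconds': []}
print(parse_hr_measurement(bytes([0x10, 150, 0x00, 0x02])))
# {'bpm': 150, 'energy_kj': None, 'rr_seconds': [0.5]}
```

Logging every decoded value with a timestamp gives exactly the second-by-second record the study ended up reconstructing from a screenshot.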

Or here’s how they did the Microsoft Band:

“The mitmproxy software tool [15] was utilized to extract data from the Microsoft Band, following the technique outlined by J. Huang [16]. Data packets transmitted by the Microsoft phone app were re-routed to an external server for aggregation and analysis. Sampling granularity varied by activity and subject. In cases where multiple data samples were collected each minute, the last data sample for the minute was utilized in the analysis.” (J. Pers. Med. 2017, 7, 3; p. 5 of 12)

So, let me help you decode this: They didn’t use the actual data recorded in the app, but rather, they picked one data point per minute (the last one) in hopes that it’d represent what occurred over that entire minute. Yes, the Microsoft app sucks for data collection – I agree – but this isn’t an acceptable way to deal with such suckiness. You don’t throw away good data.

Or, here’s how they did the Apple Watch data:

“All data from the Apple Watch was sent to the Apple Health app on the iPhone, and exported from Apple Health in XML format for analysis. The Apple Health app provided heart rate, energy expenditure, and step count data sampled at one minute granularity. For intense activity (running and max test), the sampling frequency was higher than once per minute. In cases where more than one measurement was collected each minute, the average measurement for the minute was utilized, since the minute average is the granularity for several of the other devices.”

So it gets better in this one. They acknowledge they actually had the more frequent data samples (they’d have had 1-second data samples), but decided to throw those out and instead average at the minute.
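To make the two downsampling choices concrete: the quoted methods collapse a minute of data either to its last sample (the Microsoft Band approach) or to its mean (the Apple Watch approach). On a minute where heart rate is still climbing (hypothetical 1 Hz data below), the two rules already disagree by about 30 bpm, and both discard the other 59 samples.

```python
from statistics import mean

# Hypothetical 1 Hz heart rate for one minute of a walk-to-run transition:
# HR climbs steadily from 100 to 159 bpm (made-up numbers).
hr_1hz = list(range(100, 160))

last_sample = hr_1hz[-1]   # "last data sample for the minute"
minute_avg = mean(hr_1hz)  # "average measurement for the minute"

print(len(hr_1hz), "samples reduced to one value")  # 60 samples reduced to one value
print("last sample:", last_sample)                  # last sample: 159
print("minute mean:", minute_avg)                   # minute mean: 129.5
```

Mixing the two rules across devices, as the study did, means two perfectly accurate devices could be scored differently for the same minute.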

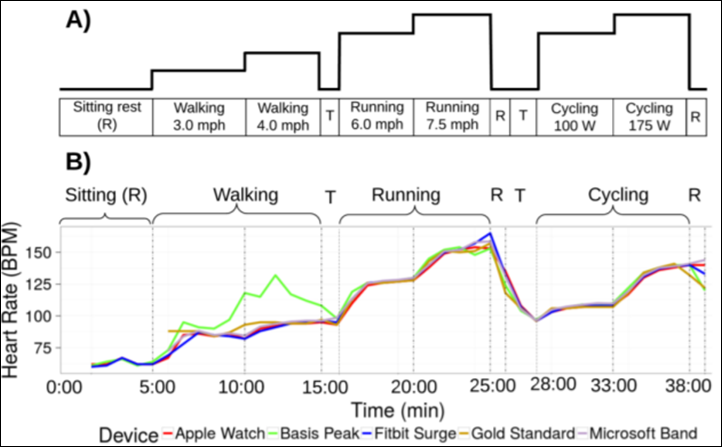

But what’s so bizarre about this is how convoluted this study attempt was when it came to collecting the data. Remember, here’s roughly what each participant did:

So you see what are effectively three and a half sports here: Walking, Running, Cycling, and…Sitting.

That’s fine (I actually like it, as I said before). There’s complete validity in testing across all three and a half things. But the mistake was trying to treat it as a single entity and record the data streams live. The study procedure documents skip over whether these devices were even switched between running and cycling modes, for example. None of the devices they tested were multisport devices. So did the participant stop and start new activities? The graphs above certainly don’t show that, because doing so on most of these devices isn’t super quick.

None of which explains the most obvious thing skipped: Why not use the activity summary pages from the apps?

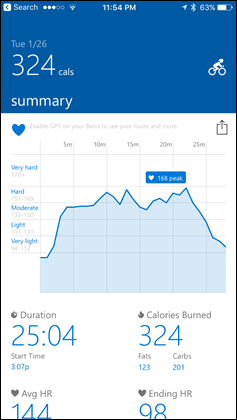

Every single one of the devices they tested will give you a calorie total at the end of the activity. Here are a few pages from these respective devices that show just that (left to right: Fitbit, Apple Watch, Microsoft Band):

Calories are prominently displayed for these workouts in all three of these apps. This is the number they should have used. Seriously. Companies make this pretty little page so that every one of us on this planet can easily figure out how much ice cream we can eat. It’s what 98% of people buy activity trackers for, and in this case they didn’t use the one metric that the entire study is based upon.

Instead, they tried to triangulate the calories using minute averaged data. Which…isn’t real data anymore. It’s alternative facts data. And thus, you get inaccuracies in your results.

I went back to them and asked about this too. Here’s why they didn’t use the totals in the app:

“This is a good idea, but unfortunately per-workout totals are reported as a sum of calories for a given workout. We were instead interested in per-minute calorie expenditure, which would not be reported in the per-workout summary. The reason for our interest in the per-minute values is that there is some adjustment as a person transitions from one activity to another (in both heart rate and energy expenditure). Consequently, in the 5 minute protocol for each activity, we used the energy expenditure and heart rate for the final minute of the protocol (to ensure that a “steady state” rather than transient measurement was obtained).”

I get what they are saying – but again, that’s not giving an accurate picture of the calorie burn. Instead, it’s only looking at one averaged minute from each protocol chunk. I’m not sure about you, but I don’t typically throw away my entire workout save the last minute of it. Also, focusing on a single minute’s worth of data serves to exaggerate any slight differences. For example, if one unit is off by 1-3 calories in that single minute, and you then multiply that out over a longer period, you exaggerate an error that may not have actually happened. We don’t know what happened in those other minutes, because they were thrown away.
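A small, entirely hypothetical example shows how much the choice of window can change the verdict. The device below tracks the reference closely over the whole five-minute stage, yet looks 20% off if only the final minute is compared.

```python
# Hypothetical per-minute calories for one 5-minute protocol stage.
# Invented numbers, not data from the study.
reference = [7, 9, 10, 10, 10]  # e.g. from indirect calorimetry
device = [8, 10, 9, 9, 12]      # e.g. from a wrist wearable


def pct_error(measured, truth):
    """Signed percent error of a measurement against the reference."""
    return 100 * (measured - truth) / truth


final_minute_err = pct_error(device[-1], reference[-1])
stage_total_err = pct_error(sum(device), sum(reference))
print(f"final minute only: {final_minute_err:+.1f}%")  # final minute only: +20.0%
print(f"whole stage total: {stage_total_err:+.1f}%")   # whole stage total: +4.3%
```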

And that all assumes they got the right numbers (for example, it’s notoriously easy to get wrong numbers out of the JPG graph conversion).

Note: I did confirm with them that they configured each device within the associated app for the user’s correct age/gender/weight/etc as supported by that individual device. That’s good to see; a lot of studies skip this too, which would also invalidate a huge chunk of the data.

Wrap-up:

Early on in the study, they state the following:

“All devices were bought commercially and handled according to the manufacturer’s instructions. Data were extracted according to standard procedures described below.”

The only thing likely true in this statement was that all devices were bought commercially. After that, nothing else is true. The devices were not handled in accordance with manufacturer’s instructions. Further, the data was not extracted according to manufacturer’s intent/instructions. And then to imply the methods they used were ‘standard’ is questionable at best. The standard method would be to just look at the darn activity pages given on every single app out there. Did the calories match? It’s really that simple for what their goal was.

Instead, they created a Rube Goldberg machine that produced inaccurate results. That’s unfortunate, because I actually agree with the theory of what they’re saying: that every company does something different with calories. Unfortunately, they didn’t prove that in this study. Instead, they proved someone didn’t read the manual on how to use the devices.

Ironically, that’s exactly the same problem I found the last time I dug into a study. The folks in question didn’t use the products properly, and then misunderstood how to analyze the data from them. No matter how many fancy algorithms you throw at it, crap data in means crap data out.

Once again, you have to understand the technology in order to study it.

Sigh.

Update 1 – June 12th, 2017:

Over the last few days, I’ve received additional information that’s added more doubt and concern about the study. Beyond the errors I identified above, it’s come to light that the researchers made a significant error with the PulseOn device included in the testing. Back in November 2015, the authors of the study approached PulseOn to see about ways to get the raw HR data from the PulseOn unit. PulseOn then agreed to provide them a special internal firmware version to gather that data. However, that firmware version was only specified for HR data, and not calorie data.

In fact, it didn’t calculate calories at all, as it didn’t include the Firstbeat heart rate algorithms/libraries (which is what PulseOn uses, as do a number of other companies such as Garmin, Huawei, etc…). Instead, that internal version had a data field titled “wellness – EnergyExpenditure”. But that’s not what’s exposed to the consumer. See, that specific field doesn’t include any HR data; it only has the motion-based calorie metrics used when the HR sensor isn’t in play. In other words, it’s useless, non-visible data, since it hasn’t yet been combined in the algorithms with the HR calorie data.

Unfortunately, the study’s authors used the wrong data field, which led to dramatically wrong calorie results (as seen in the study). Had the correct calculations been used, it’d be an entirely different story. And again, as I pointed out above, this was a case of trying to out-smart the default app data points that are normally given to a consumer. When you do so, you start to make up data points that don’t exist.

Finally – the authors have confirmed to PulseOn since publishing that they used the incorrect data field. It’s unknown whether or not they’ll post a correction or errata to the paper.


Better designed testes?

Figures, I make one change to my draft in that section and screw it up. At least my paragraphs have balls.

Big Balls at that!

We academics usually joke about how nasty reviewer 2 (or 3) is. Those authors survived the review process. Then comes Ray…

You should get in contact with the journal’s editor and publish this as a comment on the original paper. That might help to distribute your important methodological concerns in the field.

+1

If you want to see changes in academia, put your well articulated comments in peer-review.

Great job calling out garbage science.

Completely agree with this. I moonlight as a journal editor (humanities) and I would be delighted if someone sent me a response piece. Please do get in touch with the editors!

Is RTFM a methodological concern?

Ray does a great job, but if you showed that same picture of the MIO being worn on the wristbone to A LARGE PROPORTION of Ray’s readership, they would all have made the same comment.

So we are talking about both a lack of knowledge in their supposed area of expertise and an inability to RTFM.

+ 2

The way to get the issues you identified addressed is to send a letter to the editor of the underlying journal pointing out the study’s deficiencies. Because besides the study authors not understanding the problems in their study, apparently the reviewers didn’t either.

In defence of their protocol, I bet 99% of users don’t read the manual either, so using it incorrectly is probably reflective of real life performance.

“Study proves that misused wearables provide inaccurate data” – Not sure how useful that would be!

In defense of not reading the manual, I just read Ray’s write up of whatever I buy. Seems better and easier than the manual.

Seems like they tried to figure out what is the most complicated way they could create a study for something that isn’t really that complicated. They really didn’t seem to have any understanding how these devices work in the real world or what the raw data provided really means.

But boy did all their process descriptions sound sophisticated.

Good job Ray.

Has there been any response from them since you’ve published this?

I haven’t. Though, it’s still only 10AM in California.

Also, only having one of each device means they really didn’t have a valid sample size per device. Compound that with the fact that they probably didn’t analyze the fitness level of each volunteer (at least for Garmin, you need to be realistic on the activity-level survey for the per-activity calorie counts to be realistic), and they added in some pretty massive variables.

I’m interested to see if the folks at Stanford respond and (hopefully) work with you on how to make their study accurate and relevant.

Ray makes many good points. Still, it is interesting that the researchers found HR was reasonably accurate, while calorie burn was pretty far off. That suggests to me the calorie burn issue was not caused by the use of multiple devices or the use of a narrow slice of calorie burn data. (Though I guess using just a slice of calorie data would matter if the device calculates total calories by some method other than adding together the slices of data.)

Thanks for the effort (and experience) you’ve put into this debate. It’s crucial for those that take self monitoring seriously. It’s useless (for me) if the HR data is unreliable.

“In case you’re wondering why this did this – here’s what they said:”

Wondered if it was autocorrect error (they did this) maybe…..

Nice article Ray. The downfall of science will be bad science like this. Too much garbage out there and people just read the headlines.

If you do take this further, I briefly read the study and for this sort of experimental design with multiple watches on one arm, it is more than a little crazy that they didn’t include a blocking variable. Professor Hastie should know better and if time was a problem a balanced incomplete block design could have worked. I can almost guarantee you he only saw the data after the experiment and they forgot to record the position of the watch so there was nothing he could do.

I believe the study included a variable to reflect the position of the device (anterior vs posterior). As far as I can tell, they didn’t test for two devices vs one, but their overall finding that HR was reasonably accurate suggests the presence of two devices did not cause significant error.

Out of curiosity, regarding the issue of dual-wrist testing:

Do I understand correctly that two optical HR watches on the same wrist is a problem since both need to be snug, but having a non-optical HR watch and an optical HR one on the same wrist/forearm is fine so long as the watch without optical HR is worn a bit loose?

I’d also like to know the answer to this as I sometimes wear my Mio Link and Polar M400 right next to one another. I haven’t noticed any significant difference in HR data compared to one on each wrist/forearm.

Mostly yes, but a little bit no…

If you need or want to wear two watches on one wrist, you should wear the non-OHR device at the low position. Tightness-wise wear that however you like, but not so loose that it can contact the other watch. Another source of error in the Stanford study would be from the watches contacting each other.

If the non-OHR device was worn in the “Higher” position and tightly you could block some of the blood flow to the OHRM.

Exactly what Sneff said.

And I didn’t get into the whole touching thing too much here – but it’s definitely true. Both in terms of OHR (since touching can fake cadence which could override HR), as well as steps (since it fakes cadence).

One has to keep in mind that, in the grand scheme of things, the watch is picking up very tiny signals to determine HR. Cadence is something that first has to be filtered out, but if the unit is also getting a secondary whack to the side of its head with each bounce, that quickly starts to look like HR.
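That effect can be illustrated with a toy spectral heart rate estimator in Python (NumPy). Everything here is an assumption made for illustration: the 25 Hz sample rate, the signal amplitudes, and the crude “ignore anything near cadence” rule. Real devices typically estimate cadence from the accelerometer and use far more elaborate, proprietary filtering. The point is simply that once the artifact at cadence is stronger than the pulse, a naive peak picker locks onto cadence instead of HR.

```python
import numpy as np

FS = 25       # Hz, hypothetical optical sensor sample rate
SECONDS = 30
t = np.arange(FS * SECONDS) / FS

hr_hz = 150 / 60       # true heart rate: 150 bpm
cadence_hz = 170 / 60  # watches knocking together at 170 taps per minute

# Made-up optical signal: a weak pulse component plus a much stronger
# motion artifact at cadence (amplitudes are illustrative only).
signal = 0.2 * np.sin(2 * np.pi * hr_hz * t) + 1.0 * np.sin(2 * np.pi * cadence_hz * t)

spectrum = np.abs(np.fft.rfft(signal))
freqs_bpm = np.fft.rfftfreq(signal.size, d=1 / FS) * 60

# Naive estimator: strongest peak anywhere in a plausible HR range.
plausible = (freqs_bpm >= 40) & (freqs_bpm <= 220)
naive_bpm = freqs_bpm[plausible][np.argmax(spectrum[plausible])]

# Toy cadence rejection: ignore anything within 5 bpm of the known cadence.
not_cadence = plausible & (np.abs(freqs_bpm - 170) > 5)
filtered_bpm = freqs_bpm[not_cadence][np.argmax(spectrum[not_cadence])]

print(f"{naive_bpm:.0f} {filtered_bpm:.0f}")  # 170 150
```

In this toy, the rejection only works because the cadence is known exactly; random taps from a neighboring watch are much harder to separate.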

It would take me 3 months, 8 hrs a day to write an article like this. Well Done Ray!

Casey

Thanks for calling them out on this, Ray. I’d also love to see you get this published alongside the study.

there is so much so-called “science” that is complete garbage and obviously so to anyone with a common understanding of the subject (let alone a genuine expert like you), the supposed “scientists” need to be held to account and shamed whenever they publish such poor work.

Or maybe your ego is a tiny little bit bruised because no one is asking you to get involved in these studies when you are the self-proclaimed authority in this field? There is a process to scrutinize scientific research and it’s called peer review which involves scientists the last time I checked, not tech bloggers that make coin on clicks. If you strive to become a respected and published researcher then you may have to leave your cave at some stage and not just shout out from your high virtual horse whenever you so please without subjecting yourself to the same rules and processes.

I know this will likely get me a lot of flak here but I am just getting more and more annoyed by the general lack of respect and humility here. I was once a fan but something got lost since the Runner’s World’s 50 Most Influential People in Running piece followed by your full-time commitment. Now, bash away folks…

I don’t see this as an ego trip. Ray raises valid points that peer review obviously failed to address. If anything, this is a remarkable example suggesting that peer review is too focused on statistical rigour, and that review teams might benefit from replacing one of the three academic insiders with an outside expert. That will only work if peer reviewers are paid, but we’re discussing that anyway.

You are completely right. Scientific publishing became a matter of politics and friends.

Scientists don’t have a clue here… sorry you felt hurt and compelled to try and defend them

You need to be a practitioner in the field as well (i.e. a coach or an athlete) before you can credibly run any experiment of this kind. À la Steve Magness… Till then, FAIL…

That’s the key. Ray has very specialized knowledge about this that most of the rest of us, including obviously the authors, don’t. How many people own multiple devices with OHR and want to use more than one at once? (I own one, and I don’t pay any attention to the numbers. When I care about the numbers, I wear a chest belt.) They were just trying to get the data for this with as little time/effort as possible, and screwed up because they didn’t know. Obviously neither the authors nor the reviewers read DC Rainmaker, and it’s quite likely they don’t ordinarily use wearables. And I’ll bet their readership numbers spiked yesterday :-)

Ingo-

I don’t automatically grant some magical level/assumption of authority to someone who has a fancy title in front of/behind their name. It doesn’t matter if that’s CEO, PHD, or PEON. You have to prove that you know your subject and that you’ve done that subject justice. I love people who know their shit, even if I disagree with their stance (though, I generally find that when people know their shit inside and out, I rarely disagree with them in this field.)

Assuming someone with a title is always right is exactly how this study got through its various reviews. People looked at titles and assumed other people knew what they were doing correctly. Clearly not.

If there’s something that you believe I missed above in the analysis – I’m happy to revisit it. But at this point a boatload of people around the wearables community have chimed in, both here, Twitter, and e-mail and seemed to agree with me (actually, nobody has disagreed with me).

I’m pretty sure this post is done in a pretty respectful way, especially given the entity in question went out of their way to contact various media/press outlets to get attention for their study upon release. When you seek attention, you get the spotlight along with it.

As for this peer review process – time and again, it’s failed. It doesn’t always fail, but it failed here, and it’s failed horribly on other studies I’ve evaluated. So perhaps that process isn’t as valid as it used to be. Or maybe it’s not valid for this sector. Either way, until that changes, I guess we lowly tech bloggers in a cave are going to have to hold those with fancy titles accountable.

Cheers.

Ingo’s comments are quite amusing. Some academics claim to be experts with hundreds of publications… hundreds of publications in poor-quality journals with reviewers who just don’t know their arses from their elbows.

Each and every study (in any field) has to be taken on its merits. Bits missing from the methods section which sets alarm bells ringing? They’re missing for a reason…

I am a scientist myself and the review process is notorious for not always weeding out bad publications. If you are well connected, the chances are very high that your “anonymous” reviewers will be your friends and will help get your article published in well-respected journals, even if it’s not solid.

The fallacy of experts is one of the biggest problems nowadays: having titles and awards does not make you an amazing scientist.

It would, perhaps, be fair to criticise Ray were you first to pick apart his arguments. However, a troll-like ad hominem attack doesn’t help.

As a scientist with a PhD, I find it really sad to see that universities with a good name are not able to use modern technology properly. Unfortunately, or what is even worse, the journal is peer reviewed, with an impact factor of 1 (low). So other people/profs/PhDs have read it and said “Nothing wrong with this”. I think this is an unfortunate consequence of the urge to publish articles in order to show numbers rather than quality.

There are only two options:

1. You submit a letter to the editor of the journal saying the article is rubbish. But as I assume you don’t have a PhD, they might just wave you away (yes, unfortunately the scientific publishing world is lots of politics and having the right friends nowadays).

2. You write your own article and find a prof/PhD who wants to publish it with you (at their cost).

And I think you will agree both need time and effort you are probably not wanting to invest, though it would make the world a tiny bit better.

Yes, I am happy I was able to leave academia and now work at a large healthcare tech company.

As always Ray, thanks for sharing your opinion.

A great comment. This is what should be done. I am happy to help. I have 200+ papers published in peer-reviewed forums over the years and I also have a professorship. A good letter and commentary to publish would be really valuable. I have done a lot of research in this domain, and bad science like this is so frustrating. It’s like giving up the mission to find the truth in exchange for fast publication and high press coverage.

I am a scientific journal editor, and I would like to receive any feedback about any of the studies we publish; the editorial team can decide on the expertise of the writer, no PhD required! You should definitely write to the journal. Peer review is the least worst system and it screws up; I can almost guarantee the reviewer(s) on this weren’t experts in the tech, more likely the physiology. The journal editor needs to know for next time. If you want a hand writing a letter to the editor, get in touch. Chris

The thing is though, this could have been a study of literally anything. The technology is pretty irrelevant to most of the points Ray highlighted. Using the last data point in a minute for one watch and the average over a minute for another is just bad statistics and bad science and should have been caught and highlighted by any half competent high schooler. That isn’t PhD level stuff and it’s not domain specific stuff.

I agree that Ray’s input on the devices might be valuable to the scientific community, but his input on science, maths and method shouldn’t be necessary.

To my mind, this actually makes the scientific journals no better than (unpopular) blogs as they seem to be publishing too many papers which means there isn’t sufficient bandwidth to thoroughly review them. I suspect “peer review” these days means “had a quick read”. Are there still even consequences for rubber stamping a bad study in a journal?

I just like my Garmin 920 to give me the same number day after day.

So if I walk round the block and it reads 1000 steps, I just want it to read 1000 steps (or thereabouts) every day.

This is 2017! Really, having humans wear multiple devices for testing is crazy. A college with a plastics engineering department could have built a 5-foot-long robotic or artificial wrist and run internal wires to emulate the heartbeats picked up by optical wearables. A connected laptop could simulate an established 20-minute workout ranging from 35 bpm to 180 bpm. Strap on the wearable, run the program, and compare accuracy over the workout.
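As a rough illustration of this idea, the target profile such a rig would play back could be generated as below. This is a hypothetical sketch: the 20-minute duration and the 35 to 180 bpm range come from the comment, while the ramp shape, the function names, and the conversion to beat intervals are assumptions.

```python
def workout_profile(duration_s=20 * 60, low_bpm=35, high_bpm=180):
    """Hypothetical target HR for each second: linear ramp up to the midpoint, then back down."""
    half = duration_s / 2
    profile = []
    for t in range(duration_s):
        # Fraction of the way from low_bpm to high_bpm at second t.
        frac = t / half if t < half else (duration_s - t) / half
        profile.append(round(low_bpm + (high_bpm - low_bpm) * frac))
    return profile


def beat_interval_ms(bpm):
    """Spacing between simulated pulses the rig would need to emit at a given rate."""
    return 60_000 / bpm


profile = workout_profile()
print(len(profile), min(profile), max(profile))  # 1200 35 180
print(round(beat_interval_ms(180), 1))           # 333.3
```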

I think the challenge is that it's never real enough. For example, in power meter testing, those sorts of tests (with dyno systems to simulate a human) are always easily passed. But it's the nuances of the human body that trip up most sport devices, be it wearables or power meters.

I understand the inaccuracies involved with wearing two devices on the same wrist. Are there any concerns about differences when wearing one device on the left and one on the right?

Asking because I returned a new Garmin VivoSmart 3 when it gave really bad results with respect to walking and climbing stairs, both compared to the Fitbit Charge HR I was also wearing and “the actual experience”. Case in point: climbing 15 floors was counted by Fitbit as 15 floors, Garmin only counted 3 (or even less). Walking for 20 minutes: Fitbit was close, Garmin only recorded the last 4 minutes or even less. Fitbit was worn on the left (non-dominant) and Garmin on the right; both about 1″ above the wrist bone.

Yes and no (as to concerns).

No, there are no concerns from the standpoint of wearing one per wrist. That's totally fine, as neither impacts the other.

Yes, though, from the standpoint of steps (and sometimes, but more rarely, HR), there are often real differences between people's movements on their left vs. right hands (my right hand/arm doesn't move much when I run, whereas my left does). Opinions vary on whether companies should be expected to account for that, but it should at least be known.

From a testing standpoint, I personally fall in the camp that it shouldn't matter. The tech should be able to overcome those obstacles (and most can). At the same time, if someone is trying to increase accuracy on their own device, then I'd look at troubleshooting things like switching wrists and placement within a given wrist/arm.

Interesting post, good analysis.

Another shift in step counts can come from the “right-handed” effect. Polar, for example, advises you to wear the watch on the non-dominant wrist; otherwise it could count more steps and activity, since right-handed people move that hand a lot. And vice versa for left-handed people.

This kind of thing happens more than you might imagine in sports science studies (direct experience). Lots of words on statistical analyses and complex procedures… but whacking great fatal flaws which are glossed over.

This is a great article, Ray – I completely agree. It seems like the researchers were quite sloppy about their work in a number of ways. The thing I find the most surprising is that Hastie is one of the authors of the paper. He’s quite a good statistician. I’m shocked.

A couple of days ago I read another interesting comment in which the author said (and I agree) that it is not correct to say that smartwatches measure heart rate.

Heart rate comes from ECG electrodes, which measure electrical activity; a smartwatch doesn't use electrodes, and for this reason it doesn't show HR but pulse rate (“1 minute averaged PR”).

Two points:

A) Heart rate, by every single medical definition, is some variant of: “a measure of cardiac activity usually expressed as number of beats per minute”.

Nobody says that it has to be measured by ECG, or optical HR sensor, or strap, or other means. Implying otherwise would somehow imply that we couldn’t measure our heart rates before these technologies existed. Which of course is silly. It’s no different than someone implying that a digital image isn’t a photo.

B) “for this reason don’t show HR but Pulse Rate “1 minute averaged PR”.”

Simply no. Just factually inaccurate. Staring at a red stop sign and saying it's a blue stop sign would be more factually accurate. Anyone who says the above is so far removed from understanding the technology that they shouldn't be studying it, or for that matter even using it. Like, step-away-from-the-watch levels of don't-use-it.

That’s all.

Hi,

there’s a difference between pulse and heart rate:

“Heart rate is the number of beats/minute that your heart contracts and pushes blood out of itself into your arteries.”

“Pulse is the wave of blood flow felt over your superficial arteries when the heart does this pumping”

“If your heart is strong and it is pumping out enough blood to create a palpable pulse, your pulse rate will be felt over one of these arteries, and it will be equal to your heart rate. If your heart is beating irregularly or weakly, your heart rate will be higher than your palpated pulse.”

None of which contradicts what I wrote above.

Hi! Could you please elaborate on that phenomenon?

“The bigger issue here is them wearing two optical HR sensor devices per wrist (which they did on all participants). Doing so affects other optical HR sensors on that wrist. This is very well known and easily demonstrated, especially if one of the watches/bands is worn tightly. In fact, every single optical HR sensor company out there knows this, and is a key reason why none of them do dual-wrist testing anymore. It’s also why I stopped doing any dual wrist testing about 3 years ago for watches. One watch, one wrist. Period.”

Any reference to a paper showing the error rates and so on?

Thank you!

Kate

I'm not aware of any paper that covers this (though I haven't looked), but it's pretty common knowledge within the optical HR sensor industry/community. I've had discussions about it with Valencell, Garmin, FirstBeat, TomTom (+LifeQ), Mio, Philips, and others that I can't remember off the top of my head.

Though, it’s not terribly hard to demonstrate the effect.

Hi All-

For those following along in the comments, I’ve placed an ‘Update 1’ section into the piece, discussing some new information on how the data collection for one model was significantly flawed. Details here: link to dcrainmaker.com

Cheers.

As a student conducting ongoing research on similar devices myself, it's great to see a critical evaluation of research protocols from somebody who knows the nuances of the wearable field.

One question I do have for you, Ray: do you have any advice on how best to access data that isn't averaged over a one-minute epoch? Fitbit's intraday data, for example, is averaged by the minute, while I've yet to find a way to access detailed data from Garmin's Vivosmart range. There's a very good chance there's an obvious answer to this question, but I've yet to find it. If you have any advice, I'd love to hear it.

To my knowledge, there's no good way for 24×7 data. I know Garmin was looking to add 24×7 data into Apple Health, which would actually have made it reasonably easy to export, though I haven't looked closely to see if it's doing that today.

On the not-so-good way: with Garmin, the 24×7 data is uploaded in .FIT files. So you could technically keep those offline and then crack them open to extract all the data. It shouldn't be too hard to read it from an HR standpoint (and then cross-validate the numbers to ensure it's decoded properly).
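As a first step toward cracking a .FIT file open, here is a minimal standard-library Python sketch that validates the file's fixed header, following the 12- or 14-byte header layout described in Garmin's FIT SDK documentation. It deliberately stops there: decoding the monitoring records that hold the 24×7 heart rate needs the full FIT profile, so in practice you would reach for the FIT SDK or a dedicated parser. The function name and the sample header values are hypothetical.

```python
import struct

FIT_SIGNATURE = b".FIT"


def parse_fit_header(data: bytes) -> dict:
    """Decode the fixed FIT file header (little-endian, 12 or 14 bytes)."""
    if len(data) < 12:
        raise ValueError("too short to be a FIT file")
    header_size = data[0]
    if header_size not in (12, 14) or len(data) < header_size:
        raise ValueError(f"unexpected FIT header size: {header_size}")
    # Bytes 1-11: protocol version (uint8), profile version (uint16),
    # size of the record data (uint32), then the ASCII signature ".FIT".
    protocol, profile, data_size, signature = struct.unpack_from("<BHI4s", data, 1)
    if signature != FIT_SIGNATURE:
        raise ValueError("missing .FIT signature")
    return {
        "header_size": header_size,
        "protocol_version": protocol,
        "profile_version": profile,
        "data_size": data_size,
        # Header, then record data, then a 2-byte file CRC.
        "expected_file_size": header_size + data_size + 2,
    }


# Synthetic 12-byte header for illustration (values are made up).
sample = struct.pack("<BBHI4s", 12, 0x10, 2093, 1000, b".FIT")
print(parse_fit_header(sample))
```

Comparing `expected_file_size` with the actual file length is a cheap sanity check before trusting any decoded heart rate values.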

Finally, I know Garmin does help out various academic groups using devices from time to time (for studies). So it’s worthwhile reaching out to them and they’ll usually get you in touch with the right teams for accessing that kinda data.

Much appreciated Ray, thank you. The discrepancies you’ve highlighted in this article will certainly inform my research and hopefully that of many other scientists. Thanks again for the advice and for your continued contribution to this field.

A well-reasoned and well-written critique. However, I would point out that in spite of wearing the devices incorrectly, in spite of cobbling together different types of data and averages, and in spite of all the other problems that you cite, the devices did an adequate job of measuring heart rate, as compared with clinical-quality data taken at the same time. My conclusion is that while it's possible that the results for the bands are wildly off, the most likely result is that they all work pretty well at measuring heart rate.
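For readers who want to run this kind of device-versus-reference comparison themselves, two common summary metrics are mean absolute error (in bpm) and mean absolute percentage error. Here is a minimal Python sketch. It assumes the device and reference readings have already been time-aligned pair by pair, which is exactly the step the post argues studies often get wrong. The sample values are made up.

```python
def mean_absolute_error(device, reference):
    """Average absolute difference in bpm between paired device and reference readings."""
    if not device or len(device) != len(reference):
        raise ValueError("need equal-length, non-empty paired readings")
    return sum(abs(d - r) for d, r in zip(device, reference)) / len(device)


def mean_absolute_percentage_error(device, reference):
    """Average absolute difference as a percentage of each reference reading."""
    if not device or len(device) != len(reference):
        raise ValueError("need equal-length, non-empty paired readings")
    return 100 * sum(abs(d - r) / r for d, r in zip(device, reference)) / len(device)


device = [98, 121, 150]      # hypothetical wrist readings (bpm)
reference = [100, 120, 150]  # hypothetical ECG readings at the same instants
print(mean_absolute_error(device, reference))                       # 1.0
print(round(mean_absolute_percentage_error(device, reference), 2))  # 0.94
```

A low average error can still hide large errors during specific intervals, so plotting the paired readings over time is a useful complement to any single number.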

Clearly, the same cannot be said of their calorie estimates.

At the more fundamental level, I agree that we need more testing of wearable health tech devices, and these tests must be rigorous, relevant, reliable, and repeatable. That takes a significant investment. I wrote for PC Magazine for more than 20 years, and was active in PC Mag Labs projects, so I have an understanding of the multi-million dollar investment required for infrastructure, test development and validation, and staff with relevant experience and skills. And that’s before you test the first product.

I see that my email does not get published with my comment. Anyone who would like to contact me can email me at apoor@verizon.net.

Alfred Poor

Twitter: @AlfredPoor

Google+: plus.google.com/+AlfredPoor

Facebook: facebook.com/alfred.poor

LinkedIn: linkedin.com/in/alfredpoor/

I didn't notice whether you addressed this, but have you considered uploading your data to the Device Validation Datahub (precision.stanford.edu)? At least they would have correctly collected OHR data.

It looks like they're looking for data in a certain protocol, and it's not one I use today. That said, all of my reviews do include all the original comparative data in their proper formats, so they're more than welcome to use that data.

Just to say great work!

Do any of the Garmin OHR sensors support Heart Rate Variability (HRV) measurements? Are the sensors sufficiently precise? I use HRV for determining full recovery. The Garmin chest strap works, but I’m hoping the OHR varieties will also work.
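For context on why sensor precision matters here: HRV metrics are computed from the intervals between successive beats (RR intervals), so errors of a few milliseconds per beat show up directly in the result. One widely used time-domain metric is RMSSD, the root mean square of successive differences. Here is a minimal Python sketch with made-up interval values. It says nothing about whether any particular Garmin optical sensor is precise enough.

```python
from math import sqrt


def rmssd(rr_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return sqrt(sum(d * d for d in diffs) / len(diffs))


rr = [800, 810, 790, 805]  # hypothetical beat-to-beat intervals in ms
print(round(rmssd(rr), 1))  # 15.5
```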